Tesla’s Machine Learning Engine: From Code to Autonomous Driving

Breaking Down How Tesla Turns Algorithms into Real-World Self-Driving Systems

You’ve probably been knee-deep in learning to code and wondering, “What’s the point of all these algorithms? Where does this even apply in real life?” Well, look no further than Tesla. Every time you see one of their cars steer, brake, and change lanes on its own, that’s code in action: machine learning, to be exact.

Tesla’s software architecture shows us exactly how coding and machine learning are brought to life. Today, we’ll take a deep dive into the guts of their system. It’s not just about writing code—it’s about how that code powers autonomous machines in the real world.

1. Centralized Compute Architecture

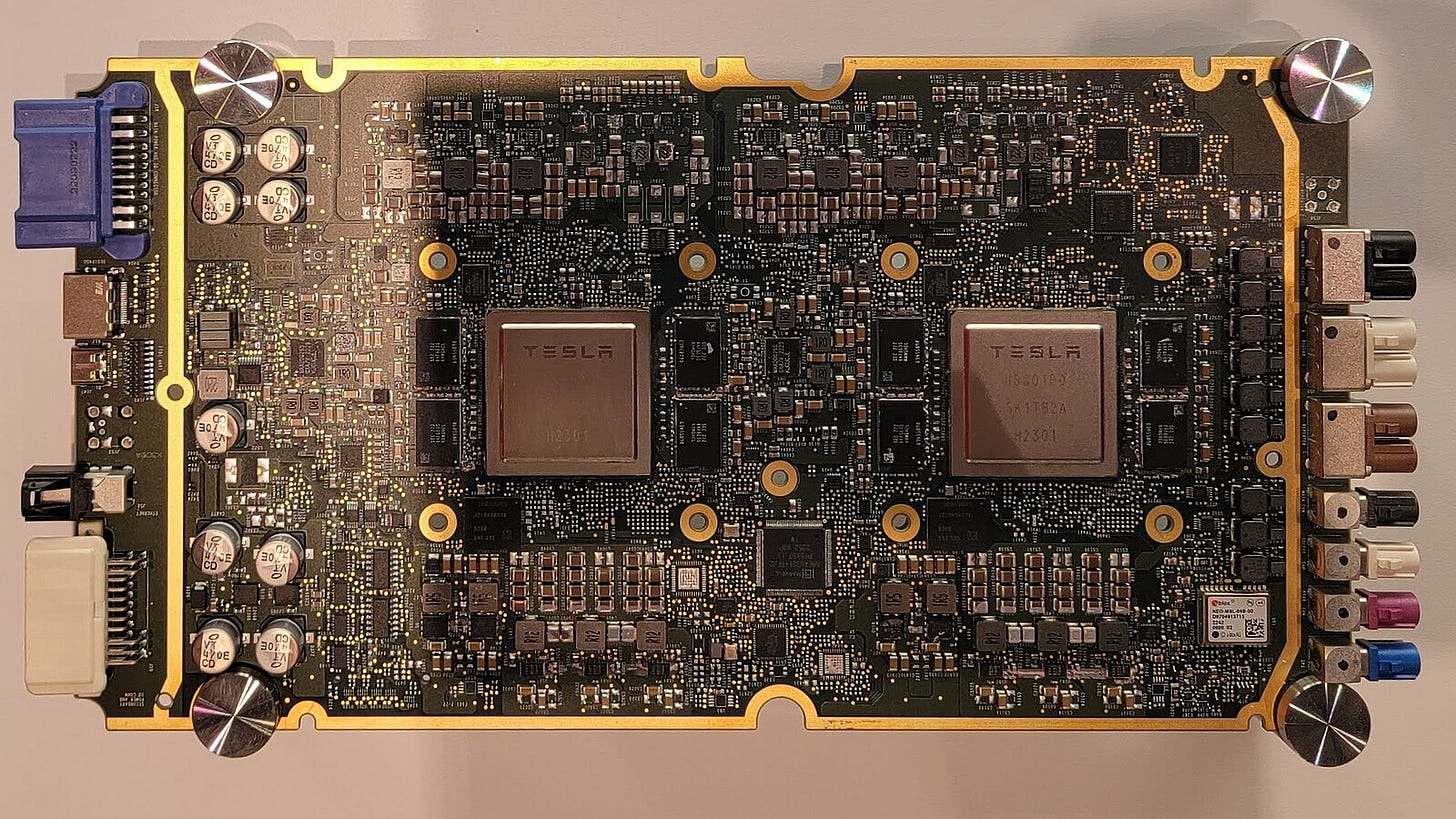

Tesla’s shift to a centralized compute system marks a pivotal change in automotive software design. Instead of spreading driver-assistance functions across many separate ECUs (Electronic Control Units), Tesla consolidated that computing into a single brain: the Full Self-Driving (FSD) Computer, also known as Hardware 3 (HW3).

Technical Breakdown:

SoC (System-on-Chip): Tesla’s FSD Computer is powered by two custom-built SoCs, each containing neural network accelerators, general-purpose GPUs, and high-performance CPUs. These SoCs allow parallel processing of tasks—such as vision processing, sensor fusion, and path planning.

Redundancy: Tesla employs a dual-redundant design in which the two SoCs run in parallel and independently, so that if one fails, the other can keep the car operating safely. That kind of failsafe is critical for any system aiming at Level 4 or Level 5 autonomy.
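To make the failover idea concrete, here is a minimal Python sketch of an arbiter that receives one control command per cycle from each of two redundant compute units. The `ControlCommand` type, the tolerances, and the rule of picking the gentler command when the units disagree are all illustrative assumptions, not Tesla’s actual logic.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ControlCommand:
    steering_deg: float  # requested steering-wheel angle
    accel_mps2: float    # requested acceleration (negative = braking)


def arbitrate(
    unit_a: Optional[ControlCommand],
    unit_b: Optional[ControlCommand],
    steer_tol_deg: float = 1.0,
    accel_tol_mps2: float = 0.3,
) -> Tuple[Optional[ControlCommand], str]:
    """Choose one command from two redundant compute units.

    None means that unit faulted or missed its deadline this cycle.
    """
    if unit_a is None and unit_b is None:
        return None, "both_failed"  # caller must trigger a safe-stop fallback
    if unit_a is None:
        return unit_b, "failover_to_b"
    if unit_b is None:
        return unit_a, "failover_to_a"

    agree = (
        abs(unit_a.steering_deg - unit_b.steering_deg) <= steer_tol_deg
        and abs(unit_a.accel_mps2 - unit_b.accel_mps2) <= accel_tol_mps2
    )
    if agree:
        return unit_a, "agree"
    # On disagreement, prefer the more cautious command (lower acceleration).
    cautious = min(unit_a, unit_b, key=lambda cmd: cmd.accel_mps2)
    return cautious, "disagree"


a = ControlCommand(steering_deg=2.0, accel_mps2=0.5)
b = ControlCommand(steering_deg=2.2, accel_mps2=0.4)
print(arbitrate(a, b))     # units agree within tolerance
print(arbitrate(None, b))  # unit A faulted, B takes over
```

Returning a status string alongside the command keeps the decision auditable, which matters when a fault has to be logged and reported.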

This centralized setup allows for real-time communication between different systems like brakes, sensors, and motors. Instead of the fragmented data pipelines of traditional ECUs, Tesla’s architecture allows rapid data exchange between different vehicle components, drastically reducing latency.

2. Neural Networks and Computer Vision

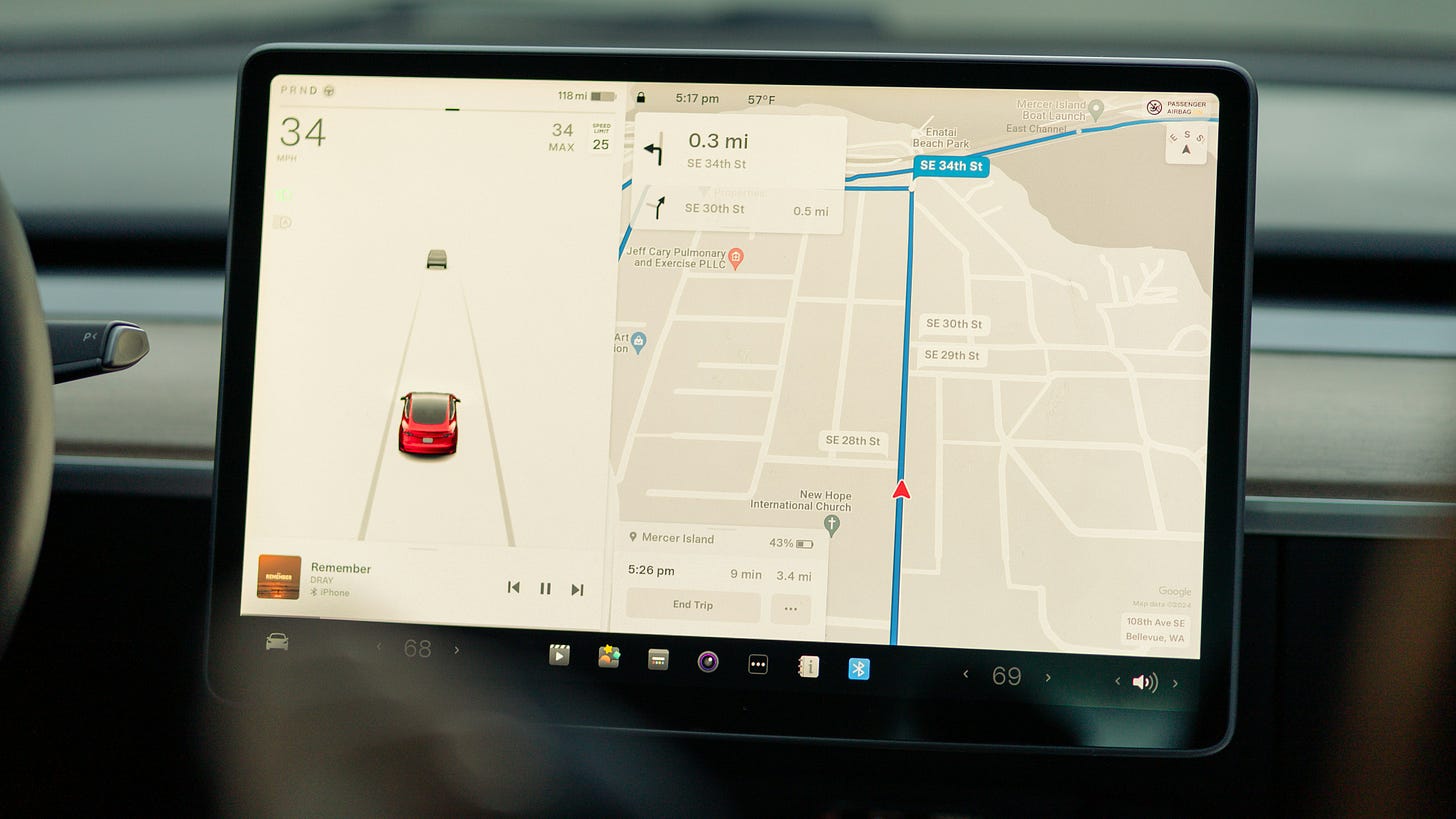

At the heart of Tesla’s self-driving capabilities is Tesla Vision, which relies on computer vision systems to understand the environment in real time. Unlike competitors that integrate LiDAR, Tesla is fully committed to using cameras and neural networks to interpret the world.

How Tesla's Vision Works:

Raw Image Processing: Tesla’s cameras feed raw video to the neural processing units (NPUs) in the FSD Computer. Tesla has said the computer can process up to 2,300 frames per second while running multiple deep neural networks (DNNs) simultaneously.

Object Detection & Semantic Segmentation: These networks perform tasks like object detection (recognizing vehicles, pedestrians, traffic signs) and semantic segmentation, breaking down the visual input into categories (road, lane markings, obstacles).

Each frame is processed through convolutional layers, where feature maps are generated and then passed through dense layers to make real-time decisions. Instead of relying on hand-crafted features, these networks learn directly from the massive dataset Tesla gathers from its fleet.

Temporal Fusion: One of the most advanced aspects is Tesla’s use of temporal fusion, where multiple frames are combined over time to improve accuracy. The car essentially "remembers" past states, which allows it to predict the future behavior of objects.
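As a rough illustration of that “memory,” the sketch below fuses one tracked object’s per-frame position estimates over a short sliding window and extrapolates where the object will be next. It is a deliberately simple stand-in (an exponential moving average plus a constant-velocity model), not the learned video networks Tesla uses; `TemporalFuser`, the window size, and the fixed frame interval are assumptions.

```python
from collections import deque


class TemporalFuser:
    """Fuses one tracked object's per-frame (x, y) position estimates over time."""

    def __init__(self, window=5, alpha=0.5, dt=0.1):
        self.history = deque(maxlen=window)  # fused positions from the last `window` frames
        self.alpha = alpha                   # weight given to the newest detection
        self.dt = dt                         # seconds between frames (assumed constant)

    def update(self, detection):
        """Blend a new detection with the previous fused estimate (exponential moving average)."""
        if self.history:
            px, py = self.history[-1]
            dx, dy = detection
            fused = (self.alpha * dx + (1 - self.alpha) * px,
                     self.alpha * dy + (1 - self.alpha) * py)
        else:
            fused = tuple(detection)
        self.history.append(fused)
        return fused

    def velocity(self):
        """Average velocity across the remembered window, in units per second."""
        if len(self.history) < 2:
            return (0.0, 0.0)
        (x0, y0), (x1, y1) = self.history[0], self.history[-1]
        elapsed = self.dt * (len(self.history) - 1)
        return ((x1 - x0) / elapsed, (y1 - y0) / elapsed)

    def predict(self, seconds_ahead):
        """Constant-velocity extrapolation from the latest fused position."""
        x, y = self.history[-1]
        vx, vy = self.velocity()
        return (x + vx * seconds_ahead, y + vy * seconds_ahead)


tracker = TemporalFuser(window=5, alpha=0.6, dt=0.1)
for x in [10.0, 10.9, 12.1, 13.0, 14.1]:  # noisy detections of a car moving roughly 10 m/s
    tracker.update((x, 0.0))
print(tracker.velocity(), tracker.predict(0.5))
```

A lower `alpha` smooths out more detection noise but reacts more slowly when an object really does change speed.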

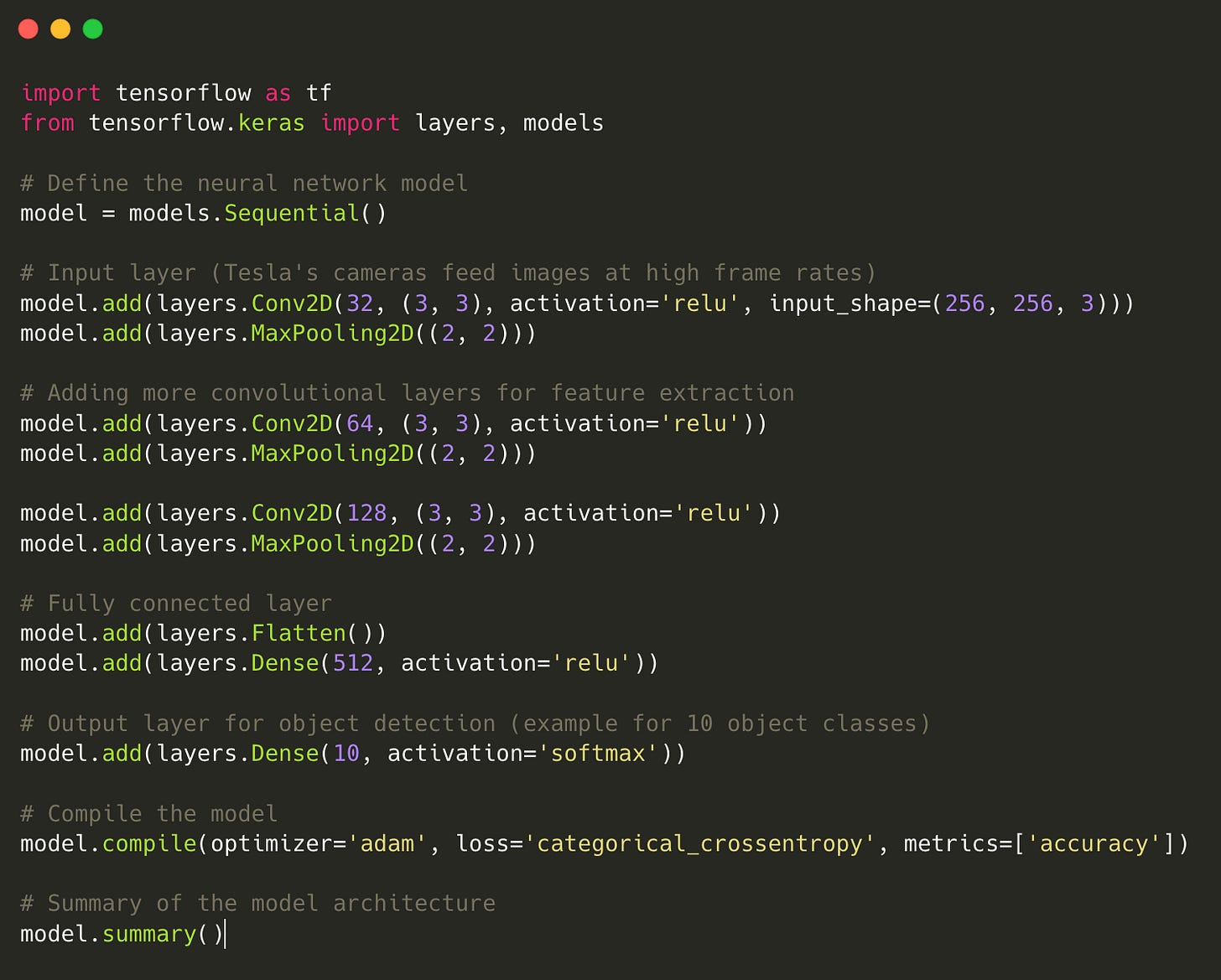

Stripped down to toy scale, an object-detection network of this kind is just a short stack of layers.
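The sketch below shows such a stack as a single forward pass in plain Python, with no deep-learning framework, so every step is visible: one 3x3 convolution filter with ReLU, 2x2 max pooling, and a dense layer with softmax. The weights are random placeholders rather than trained values and the three-class label set is hypothetical; in practice you would build this with a framework such as Keras (`Conv2D`, `MaxPooling2D`, `Dense`) and learn the weights from labeled data.

```python
import math
import random

CLASSES = ["background", "vehicle", "pedestrian"]  # hypothetical label set


def conv2d_relu(image, kernel):
    """'Valid' 2D convolution (no padding, stride 1) followed by ReLU.

    As in most deep-learning frameworks, this is cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            max(0.0, sum(image[i + a][j + b] * kernel[a][b]
                         for a in range(kh) for b in range(kw)))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; edge rows/columns that don't fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense_softmax(features, weights, biases):
    """Fully connected layer followed by softmax, giving one probability per class."""
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(image, kernel, weights, biases):
    fmap = conv2d_relu(image, kernel)          # feature extraction
    pooled = max_pool(fmap)                    # downsampling
    flat = [v for row in pooled for v in row]  # flatten for the dense layer
    probs = dense_softmax(flat, weights, biases)
    return CLASSES[probs.index(max(probs))], probs


random.seed(0)
image = [[random.random() for _ in range(8)] for _ in range(8)]  # stand-in 8x8 grayscale crop
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]               # vertical-edge detector
# 8x8 -> conv -> 6x6 -> pool -> 3x3 = 9 features; random, untrained weights
weights = [[random.uniform(-1, 1) for _ in range(9)] for _ in range(len(CLASSES))]
biases = [0.0] * len(CLASSES)

label, probs = classify(image, edge_kernel, weights, biases)
print(label, [round(p, 3) for p in probs])
```

Because the weights are untrained, the predicted label here is meaningless; the point is the shape of the computation, which training would tune.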

In a network like this:

Convolutional layers (Conv2D) extract features such as edges and textures from the camera input, the same job they do when Tesla’s system processes images from the cameras around the car.

MaxPooling layers downsample the feature maps, reducing their spatial size and the amount of computation needed downstream.

Dense layers handle the classification of objects (e.g., cars, pedestrians).

This is a high-level abstraction, but Tesla’s actual model is trained on a much larger scale with millions of data points.

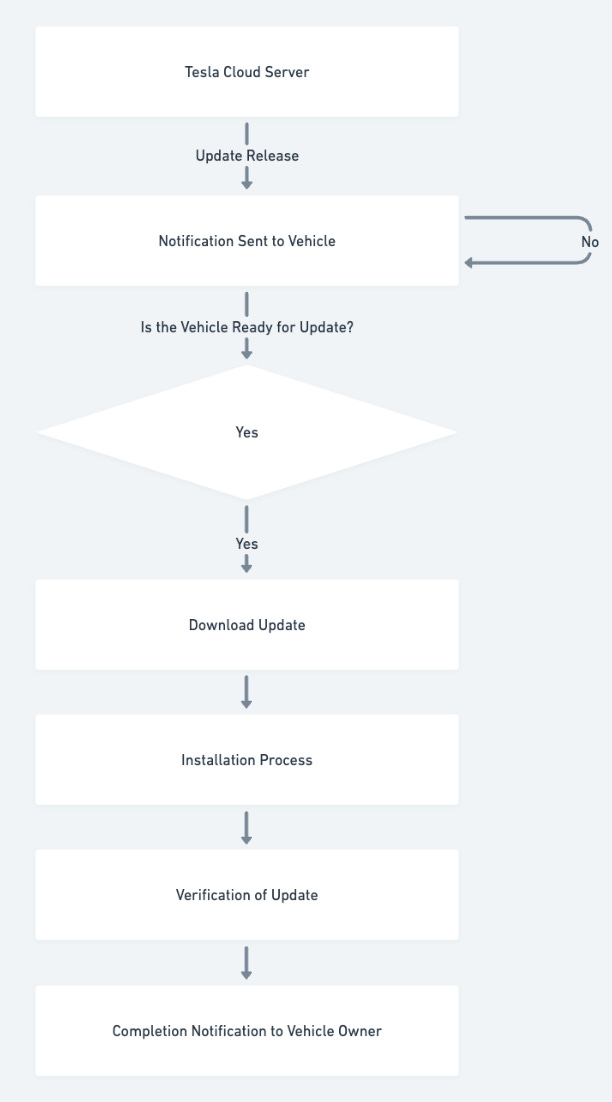

3. OTA Updates and Software Containers

Tesla’s Over-The-Air (OTA) Updates are a game-changer in the automotive world. Traditionally, automakers have needed physical recalls or service visits to update vehicle software. Tesla instead uses a fully automated OTA system to push everything from routine bug fixes to core system patches and new autonomous features.

Under the Hood:

Linux-Based OS: Tesla’s software runs on a Linux-based operating system. The system kernel is optimized for real-time performance, allowing critical tasks like sensor input and control algorithms to run with low latency.

Containerized Architecture: Tesla uses Docker-like containerization to isolate different components of the software stack. This allows the OTA updates to patch or restart individual software modules without disrupting the entire vehicle’s operation.

For example, updating the autonomous driving module doesn’t interfere with infotainment or energy management systems.

Here’s a simple Dockerfile for containerizing a path-planning module, demonstrating how Tesla might isolate various components of their software.

```dockerfile
# Start from a slim Python base image
FROM python:3.9-slim

# Set the working directory inside the container
WORKDIR /usr/src/app

# Install dependencies first so this layer is cached between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the module code into the container
COPY . .

# Run the path-planning script
CMD ["python", "./path_planning.py"]
```

This container can be deployed independently, and updates can be pushed for the specific module handling path planning without interfering with other systems like infotainment.
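To illustrate why that isolation matters for OTA updates, here is a minimal Python model of a vehicle running several independent modules. Updating one module verifies the payload, restarts only that module, and rolls back if a health check fails, while every other module keeps running. The `Module` and `Vehicle` classes, the SHA-256 digest check (a simplified stand-in for real signed-update verification), and the health-check callback are illustrative assumptions, not Tesla’s update mechanism.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Module:
    name: str
    version: str
    restarts: int = 0


@dataclass
class Vehicle:
    modules: Dict[str, Module] = field(default_factory=dict)

    def install(self, module: Module) -> None:
        self.modules[module.name] = module

    def apply_update(
        self,
        name: str,
        new_version: str,
        payload: bytes,
        expected_sha256: str,
        health_check: Callable[[Module], bool],
    ) -> bool:
        """Update a single module in place; all other modules are untouched."""
        if hashlib.sha256(payload).hexdigest() != expected_sha256:
            return False  # corrupted or tampered download: keep the current version

        module = self.modules[name]
        previous = module.version
        module.version = new_version
        module.restarts += 1  # only this module is restarted

        if not health_check(module):
            module.version = previous  # roll back to the last known-good version
            return False
        return True


car = Vehicle()
car.install(Module("path_planning", "1.0"))
car.install(Module("infotainment", "2.3"))

payload = b"path_planning build 1.1"
ok = car.apply_update("path_planning", "1.1", payload,
                      hashlib.sha256(payload).hexdigest(),
                      health_check=lambda m: True)
print(ok, car.modules["path_planning"].version, car.modules["infotainment"].restarts)
```

The infotainment module’s restart counter stays at zero throughout, which is the property the containerized design is meant to guarantee.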

4. Autonomous Path Planning

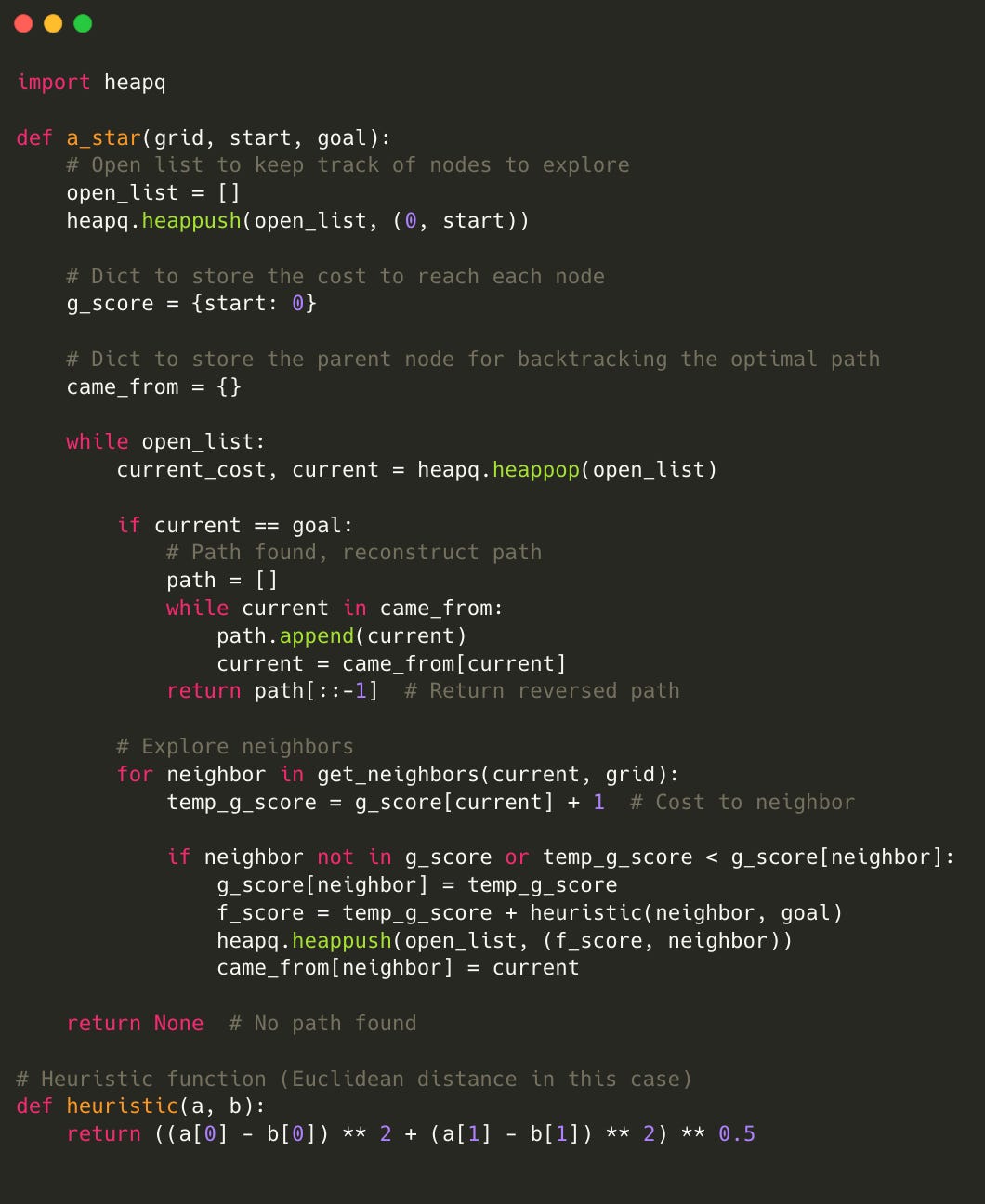

Tesla’s Full Self-Driving (FSD) system relies on complex path-planning algorithms to decide how the vehicle should navigate. These algorithms handle everything from highway lane changes to city driving scenarios.

Low-Level Insights:

Cost Maps: Path planning begins by generating a cost map—a grid-based representation of the environment where each cell represents the "cost" of driving through that space. Costs are influenced by the proximity of obstacles, traffic regulations, and road geometry.

A* and Dijkstra’s Algorithms: The vehicle uses pathfinding algorithms like A* and Dijkstra’s algorithm to compute the lowest-cost route through the environment. Once the route is computed, it’s adjusted in real time as new data flows in from the sensors.

Trajectory Optimization: After path selection, the system uses trajectory optimization algorithms to smooth out the path. This involves solving a series of nonlinear optimization problems to create a trajectory that maximizes comfort, energy efficiency, and safety.
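A toy version of the cost-map step might look like the sketch below. It models only obstacle proximity (not traffic rules or road geometry): obstacle cells are impassable, and free cells get an extra cost that grows as they approach an obstacle. The grid representation, `inflation_radius`, and `penalty` values are illustrative assumptions.

```python
import math


def build_cost_map(width, height, obstacles, inflation_radius=2.0, penalty=10.0):
    """Return a height x width grid of traversal costs.

    Obstacle cells are impassable (math.inf). Free cells cost 1.0 plus a
    penalty that grows linearly as the cell gets closer to an obstacle.
    """
    grid = []
    for r in range(height):
        row = []
        for c in range(width):
            if (r, c) in obstacles:
                row.append(math.inf)
                continue
            nearest = min((math.dist((r, c), o) for o in obstacles), default=math.inf)
            extra = 0.0
            if nearest <= inflation_radius:
                # One cell away gets the largest penalty; it shrinks toward the radius edge.
                extra = penalty * (inflation_radius + 1 - nearest) / inflation_radius
            row.append(1.0 + extra)
        grid.append(row)
    return grid


cost_map = build_cost_map(6, 4, obstacles={(1, 2), (2, 2)})
for row in cost_map:
    print(" ".join("###" if v == math.inf else f"{v:3.0f}" for v in row))
```

Inflating costs around obstacles, rather than only blocking the obstacle cells themselves, nudges the planner to keep a safety margin instead of skimming past objects.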

The key here is Tesla’s ability to continuously optimize the vehicle’s route based on sensor input, recalculating the best trajectory as new obstacles or road conditions are detected.
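The trajectory-optimization step can be illustrated with a classic gradient-descent path smoother, which trades off two goals: staying close to the planner’s waypoints and keeping consecutive waypoints in a smooth line. This is a simple quadratic smoothing scheme standing in for the richer nonlinear optimization described earlier; the weights and tolerance are illustrative assumptions.

```python
def smooth_path(path, weight_data=0.5, weight_smooth=0.2, tolerance=1e-6, max_iters=10_000):
    """Gradient-descent path smoothing with fixed endpoints.

    weight_data pulls each waypoint back toward its original position;
    weight_smooth pulls it toward the midpoint of its neighbours.
    """
    smoothed = [list(p) for p in path]
    for _ in range(max_iters):
        change = 0.0
        for i in range(1, len(path) - 1):
            for d in range(len(path[0])):
                old = smoothed[i][d]
                smoothed[i][d] += weight_data * (path[i][d] - smoothed[i][d])
                smoothed[i][d] += weight_smooth * (
                    smoothed[i - 1][d] + smoothed[i + 1][d] - 2 * smoothed[i][d]
                )
                change += abs(old - smoothed[i][d])
        if change < tolerance:  # converged
            break
    return [tuple(p) for p in smoothed]


def roughness(path):
    """Sum of squared second differences: lower means gentler turns."""
    return sum(
        (path[i - 1][d] - 2 * path[i][d] + path[i + 1][d]) ** 2
        for i in range(1, len(path) - 1)
        for d in range(len(path[0]))
    )


grid_path = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]  # sharp 90-degree corner from a grid search
smoothed = smooth_path(grid_path)
print(roughness(grid_path), round(roughness(smoothed), 3))
```

Raising `weight_smooth` relative to `weight_data` rounds the corner more aggressively, at the cost of drifting further from the original route.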

One common algorithm for finding optimal routes in autonomous vehicles is A*.
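Here is a basic A* implementation in Python, simplified for vehicle path planning. It searches a 4-connected grid in which each cell holds the cost of driving into it, so it can run directly on a cost map; the grid format and the Manhattan-distance heuristic are assumptions made for this sketch.

```python
import heapq
import math


def a_star(grid, start, goal):
    """Lowest-cost path on a 4-connected grid.

    grid[r][c] is the cost of entering cell (r, c); math.inf marks an obstacle.
    Returns (path, total_cost), or (None, math.inf) if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    # Cheapest finite cell cost; scaling the heuristic by it keeps A* optimal.
    min_cost = min((v for row in grid for v in row if math.isfinite(v)), default=0.0)

    def heuristic(cell):
        # Manhattan distance times the cheapest step never overestimates the true cost.
        return (abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])) * min_cost

    open_heap = [(heuristic(start), 0.0, start)]  # entries: (f = g + h, g, cell)
    best_g = {start: 0.0}
    came_from = {}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1], g
        if g > best_g.get(cell, math.inf):
            continue  # stale entry; a cheaper route to this cell was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and math.isfinite(grid[nr][nc]):
                new_g = g + grid[nr][nc]
                if new_g < best_g.get((nr, nc), math.inf):
                    best_g[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + heuristic((nr, nc)), new_g, (nr, nc)))
    return None, math.inf


INF = math.inf
grid = [
    [1, 1, 1, 1],
    [1, INF, INF, 1],
    [1, 1, 5, 1],
]
path, cost = a_star(grid, (0, 0), (2, 3))
print(path, cost)
```

Setting the heuristic to zero turns this into Dijkstra’s algorithm: still optimal, but it explores more cells before reaching the goal.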

A basic A* search calculates the lowest-cost path on a grid and could be one component of a larger path-planning system like the one Tesla uses to optimize driving routes in real time.

5. Data Collection and Training Pipeline

Tesla’s ability to gather data from its fleet provides a unique advantage in training its neural networks. Every vehicle on the road collects data in real-time, feeding back into Tesla’s servers where it can be used to refine the machine learning models.

The Flow:

Edge Data Collection: Cars across the fleet record video streams, sensor readings, and driver interactions, adding up to enormous volumes of data every day. Specific edge cases (like unusual road configurations or rare driving conditions) are flagged and sent back for further analysis.

Data Aggregation & Labeling: Tesla's data labeling team uses semi-supervised learning to label these datasets, sometimes using AI-assisted tools. For example, when Tesla decides to improve lane marking detection, they can rapidly pull hundreds of thousands of examples from their database for model retraining.

End-to-End Learning: Tesla’s models are trained using end-to-end learning—inputting raw data and outputting control signals for acceleration, braking, and steering. This method avoids rule-based heuristics and allows the models to generalize better.
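The collection-and-labeling loop above can be sketched in a few lines of Python. An on-car trigger decides which clips are worth uploading (here: low onboard confidence or a driver takeover), and server-side auto-labeling accepts a larger “teacher” model’s labels when it is confident, routing everything else to human labelers. The `Clip` fields, the thresholds, and the `teacher` callable are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Clip:
    clip_id: str
    onboard_confidence: float  # car's own model confidence for this clip, 0..1
    driver_intervened: bool    # did the driver take over during the clip?


def should_upload(clip: Clip, confidence_floor: float = 0.6) -> bool:
    """On-car trigger: keep only likely edge cases instead of every frame."""
    return clip.onboard_confidence < confidence_floor or clip.driver_intervened


def auto_label(
    clips: List[Clip],
    teacher: Callable[[Clip], Tuple[str, float]],
    accept_threshold: float = 0.9,
) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Server side: accept confident teacher labels, queue the rest for humans."""
    accepted, needs_human = [], []
    for clip in clips:
        label, confidence = teacher(clip)
        if confidence >= accept_threshold:
            accepted.append((clip.clip_id, label))
        else:
            needs_human.append(clip.clip_id)
    return accepted, needs_human


fleet_clips = [
    Clip("c1", onboard_confidence=0.95, driver_intervened=False),  # routine
    Clip("c2", onboard_confidence=0.40, driver_intervened=False),  # model unsure
    Clip("c3", onboard_confidence=0.85, driver_intervened=True),   # driver takeover
]
uploaded = [c for c in fleet_clips if should_upload(c)]


# Stand-in teacher model: pretend it is confident about c2 only.
def teacher(clip: Clip) -> Tuple[str, float]:
    return ("faded_lane_marking", 0.97 if clip.clip_id == "c2" else 0.55)


print(auto_label(uploaded, teacher))
```

Filtering on the car keeps upload bandwidth focused on rare situations, and the confidence gate on the server decides how much of that data still needs a human.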

6. Energy Optimization Algorithms

Tesla doesn’t stop at autonomy; the company uses its software prowess to maximize energy efficiency. Its energy management system optimizes battery performance in real time.

Key Mechanisms:

Predictive Energy Usage: The system uses historical driving patterns, real-time data, and navigation routes to predict energy consumption. This helps adjust climate control, regenerative braking, and motor power distribution to optimize range.

Thermal Management: Tesla also has sophisticated thermal management algorithms that keep battery and motor temperatures in the optimal range, adjusting coolant flows and HVAC system efficiency based on real-time thermal data.
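The thermal-management idea can be illustrated with a simple proportional controller: inside a target temperature band it idles, and outside the band it drives the coolant pump harder the further the battery drifts. The 20 to 40 °C band, the gain, and the heat/cool modes are illustrative assumptions, not Tesla’s control law.

```python
from typing import Tuple


def thermal_command(battery_temp_c: float,
                    band_low_c: float = 20.0,
                    band_high_c: float = 40.0,
                    gain_per_deg: float = 0.1) -> Tuple[str, float]:
    """Return (mode, pump_duty) where pump_duty is in [0, 1].

    Inside the band the loop idles; outside it, duty grows in proportion
    to how far the temperature is from the nearest band edge, capped at 1.0.
    """
    if battery_temp_c > band_high_c:
        return "cool", min(1.0, gain_per_deg * (battery_temp_c - band_high_c))
    if battery_temp_c < band_low_c:
        return "heat", min(1.0, gain_per_deg * (band_low_c - battery_temp_c))
    return "idle", 0.0


for temp in (-5.0, 18.0, 30.0, 43.0, 60.0):
    print(temp, thermal_command(temp))
```

The dead band avoids running the pump constantly for small fluctuations, which saves energy when the battery is already comfortable.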

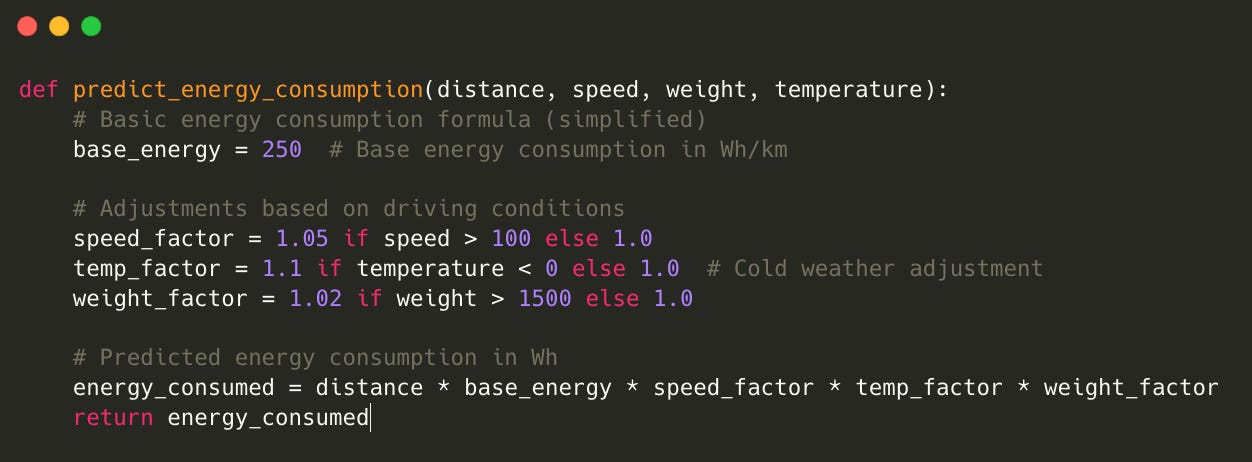

A simple function can estimate energy consumption from speed, vehicle weight, and external conditions like temperature; estimates like this feed into the many optimizations Tesla’s energy management software performs.
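Here is one way to write such a function, as a minimal sketch built on the standard road-load model: rolling resistance grows with mass, aerodynamic drag grows with the square of speed, and a climate-control term grows with the gap between outside and cabin temperature. All coefficients are illustrative assumptions, roughly in the range of a mid-size electric sedan, not Tesla figures.

```python
AIR_DENSITY_KG_M3 = 1.225  # sea-level air density
GRAVITY_M_S2 = 9.81


def predict_energy_wh_per_km(
    speed_kmh: float,
    mass_kg: float,
    outside_temp_c: float,
    drag_coeff: float = 0.23,
    frontal_area_m2: float = 2.2,
    rolling_coeff: float = 0.010,
    drivetrain_eff: float = 0.90,
    cabin_target_c: float = 21.0,
    hvac_w_per_deg_c: float = 150.0,
) -> float:
    """Estimate steady-state consumption on flat ground, in Wh per km."""
    if speed_kmh <= 0:
        raise ValueError("speed_kmh must be positive")
    v = speed_kmh / 3.6  # km/h -> m/s
    rolling_n = rolling_coeff * mass_kg * GRAVITY_M_S2
    drag_n = 0.5 * AIR_DENSITY_KG_M3 * drag_coeff * frontal_area_m2 * v ** 2
    traction_w = (rolling_n + drag_n) * v / drivetrain_eff  # power drawn from the battery
    hvac_w = hvac_w_per_deg_c * abs(outside_temp_c - cabin_target_c)
    # Watts divided by km/h gives Wh per km.
    return (traction_w + hvac_w) / speed_kmh


for speed in (50, 100, 130):
    print(speed, round(predict_energy_wh_per_km(speed, 1850, outside_temp_c=-5), 1))
```

Note how the climate-control term is a fixed power draw, so in cold weather it costs proportionally more per kilometer at low speeds, while drag dominates at highway speeds.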

Tesla is a prime example of how code isn’t just something you write for a project or a course. It’s what turns into something tangible, like a car making decisions on its own. The path from learning to code to applying machine learning can seem long, but every function, algorithm, or model you write could be the building block of something just as transformative.

So as you’re coding, remember—real-world applications, like Tesla’s, are where the magic happens. You're not just coding to learn; you're coding to create something that can change the world.